Contents

OpenMP

Tutorials, external links

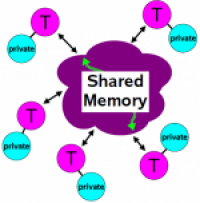

What is OpenMP

OpenMP adds constructs for shared-memory threading to C, C++ and Fortran

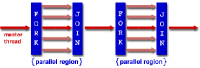

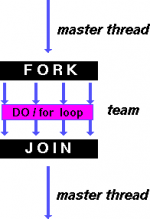

Fork–Join Model:

OpenMP consists of compiler directives, runtime routines and environment variables

Versions 4.0 (July 2013), 4.5 (Nov 2015) and 5.0 (Nov 2018) add support for accelerators (target directives), vectorization (SIMD directives), thread affinity and cancellation.

OpenMP support in C/C++ compilers

https://www.openmp.org/resources/openmp-compilers-tools/

GCC: From GCC 6.1, OpenMP 4.5 is fully supported for C and C++

echo | cpp -fopenmp -dM | grep -i open

#define _OPENMP 201511

The value of _OPENMP is the release date (yyyymm) of the supported specification. See the OpenMP Specifications page to map that date to a version number: 201511 is November 2015, i.e. OpenMP 4.5.

How to compile with OpenMP

With GCC on Linux, compile and link with the -fopenmp flag

Execution model

Begin execution as a single process (master thread)

Start of a parallel construct (using special directives): Master thread creates team of threads

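End of the parallel construct: the threads synchronize at an implicit barrier and only the master thread continues (fork-join model of parallel execution)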

Execution Example

Program ex1.c

#include <omp.h>

#include <stdio.h>

int main(void) {

int var1, var2, var3;

//Serial code executed by master thread

#pragma omp parallel private(var1, var2) shared(var3) // OpenMP directive

{

// Parallel section executed by all threads

printf("hello from %d of %d\n", omp_get_thread_num(), omp_get_num_threads());

// omp_get_thread_num() and omp_get_num_threads() are OpenMP runtime routines

}

// Resume serial code executed by master thread

}

Run gcc with the -fopenmp flag:

gcc -O2 -fopenmp ex1.c

Beware: if you forget -fopenmp, all OpenMP directives are silently ignored, and calls to OpenMP routines such as omp_get_thread_num() fail at link time!

How Many Threads?

By default, the number of threads is typically the number of processors (cores) of the node (see /proc/cpuinfo)

To control the number of threads used to run an OpenMP program, set the OMP_NUM_THREADS environment variable:

% ./a.out

hello from 0 of 2

hello from 1 of 2

% env OMP_NUM_THREADS=3 ./a.out

hello from 2 of 3

hello from 0 of 3

hello from 1 of 3

The number of threads can also be set from within the program with an OpenMP routine:

omp_set_num_threads(4);

OpenMP variables

By default, variables declared outside a parallel region are shared, and variables declared inside a parallel region are private (allocated on each thread's stack)

Programmers can modify this default through the private() and shared() clauses:

Program ex2.c

#include <omp.h>

#include <stdio.h>

int main() {

int t, i, j = 0; /* j must be initialized before it is incremented */

#pragma omp parallel private(t, i) shared(j)

{

t = omp_get_thread_num();

printf("running %d\n", t);

for (i = 0; i < 1000000; i++)

j++; /* race! */

printf("ran %d\n", t);

}

printf("%d\n", j);

}

gcc -O2 -fopenmp ex2.c

./a.out

It is the programmer's responsibility to ensure that threads access SHARED variables safely (for example, through CRITICAL sections)

OpenMP timing

Elapsed wall clock time can be taken using omp_get_wtime()

Program ex3.c

#include <omp.h>

#include <iostream>

#include <unistd.h>

using namespace std;

int main() {

double t1,t2;

cout << "Start timer" << endl;

t1=omp_get_wtime();

// Do something long

sleep(2);

t2=omp_get_wtime();

cout << t2-t1 << endl;

}

g++ ex3.c -fopenmp

./a.out

OpenMP Directives

Main directives are of two types:

- Fork: PARALLEL, FOR, SECTIONS, SINGLE, MASTER, CRITICAL

- BARRIER

Syntax

#pragma omp <directive-name> [clause, ..]

{

// parallelized region

}

//implicit synchronization

#pragma omp barrier //explicit synchronization

Parallel directive

A parallel region is a block of code that will be executed by multiple threads. This is the fundamental OpenMP parallel construct.

Examples: ex1.c ex2.c parallel-single.c

For directive

The for workshare directive

- requires that the following statement is a for loop

- makes the loop index private to each thread

- runs a subset of iterations in each thread

#pragma omp parallel

#pragma omp for

for (i = 0; i < 5; i++)

printf("hello from %d at %d\n", omp_get_thread_num(), i);

Or use #pragma omp parallel for

Examples: for.c for-schedule.c

Single and Master directives

The SINGLE directive specifies that the enclosed code is to be executed by only one thread in the team.

The MASTER directive specifies a region that is to be executed only by the master thread of the team. All other threads on the team skip this section of code.

Examples: parallel-single.c

Sections Directive

A sections workshare directive is followed by a block that has section directives, one per task

#pragma omp parallel

#pragma omp sections

{

#pragma omp section

printf("Task A: %d\n", omp_get_thread_num());

#pragma omp section

printf("Task B: %d\n", omp_get_thread_num());

#pragma omp section

printf("Task C: %d\n", omp_get_thread_num());

}

There is an implied barrier at the end of a SECTIONS directive

Examples: sections.c

Critical Directive

The CRITICAL directive specifies a region of code that must be executed by only one thread at a time.

Example:

#include <omp.h>

int main(void)

{

int x;

x = 0;

#pragma omp parallel shared(x)

{

#pragma omp critical

x = x + 1;

} /* end of parallel section */

}

Examples: ex2.c

Main clauses

Reduction clause

The reduction clause of parallel

- makes the specified variable private to each thread

- combines private results on exit

int t = 0; /* the original value of t is included in the final result */

#pragma omp parallel reduction(+:t)

{

t = omp_get_thread_num() + 1;

printf("local %d\n", t);

}

printf("reduction %d\n", t);

Examples: reduction.c

Combining FOR and reduction

int array[8] = { 1, 1, 1, 1, 1, 1, 1, 1};

int sum=0, i;

#pragma omp parallel for reduction(+:sum)

for (i = 0; i < 8; i++) {

sum += array[i];

}

printf("total %d\n", sum);

Schedule clause

Using just

#pragma omp for

leaves the distribution of loop iterations among the threads up to the implementation

When you want to specify it yourself, use schedule:

#pragma omp for schedule(....)

Examples: for-schedule.c